Building Semantic Search in Drupal with Milvus: A Complete Step-by-Step Guide

The advent of ChatGPT has raised expectations of search in recent years. Gone are the days when a user had to think carefully about tight keyword searches. Users now expect to dump whatever they are thinking into a search bar and get relevant results. This is a luxury normalised by tech giants such as Google and OpenAI. But even though very few have the budgets these companies have, that doesn't mean websites and businesses can't provide a superior search experience.

In comes semantic search. Where traditional search matches words, semantic search matches meaning.

Your (Drupal) site can now find relevant content even when the exact words don't match (without the enterprise price tag). It understands that "CMS," "content management system," and "website administration tool" all refer to the same concept.

This is a step-by-step guide to getting semantic search up and running locally on your Drupal site, powered by Milvus (what a name) vector database and OpenAI embeddings. We'll walk through every step from Docker setup to your first semantic query.

Why Semantic Search Matters

Semantic search uses machine learning to understand the meaning and context behind search queries, not just keyword matches. Here's what that means for your site:

Better User Experience: Users find what they're looking for even when they phrase questions differently than your content. Natural language queries like "how do I reset my password?" work just as well as keyword searches.

Reduced "No Results" Frustration: Semantic search finds conceptually similar content even when exact keywords don't match. Your help documentation becomes actually helpful.

Smarter Content Discovery: Related content surfaces based on meaning and context, not just shared keywords. Users discover relevant resources they wouldn't have found otherwise.

Real-World Use Cases:

- Documentation sites where users ask questions in many different ways

- Knowledge bases that need to surface relevant articles regardless of wording

- E-commerce sites helping customers find products through natural descriptions

- Content-heavy sites where traditional search creates too much noise

How It Works (I think)

When you save content, AI converts it into a mathematical representation called an "embedding" - a list of numbers that captures the meaning. These embeddings get stored in a vector database (Milvus in our case). When someone searches, their query gets converted to an embedding using the same AI model, then Milvus finds content with similar embeddings using mathematical similarity. Similar meanings have similar numbers, so the maths finds semantically related content even when the words differ.

You don't need to understand the maths. You just need to know it works remarkably well.
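
For the curious, here is a minimal sketch of the idea in Python. The three-number "embeddings" are made-up values for illustration only (real text-embedding-3-small vectors have 1536 dimensions and come from the API), and the function name is an assumption rather than anything from the Drupal modules.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hand-made 3-dimensional "embeddings" (hypothetical values, not real model output).
embeddings = {
    "content management system": [0.9, 0.1, 0.0],
    "website administration tool": [0.8, 0.3, 0.1],
    "chocolate cake recipe": [0.0, 0.2, 0.9],
}

# Pretend this is the embedding of the query "CMS".
query = [0.85, 0.2, 0.05]

# Rank stored content from most to least similar to the query.
ranked = sorted(
    embeddings,
    key=lambda text: cosine_similarity(query, embeddings[text]),
    reverse=True,
)
for text in ranked:
    print(f"{cosine_similarity(query, embeddings[text]):.3f}  {text}")
```

The two CMS-related phrases point in almost the same direction as the query and score close to 1, while the cake recipe points elsewhere and lands at the bottom.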

Prerequisites

Before we dive in, make sure you have:

- Drupal 10 or 11 installation - A working Drupal site (local development is fine). I used Drupal CMS for this.

- Docker installed - For running ddev + Milvus locally (install Docker here)

- OpenAI API key - Or another LLM provider supported by the Drupal AI module (get OpenAI API key)

- Basic familiarity with Drupal - You should know how to install modules and navigate the admin interface

- Command line comfort - Basic terminal/command prompt usage

Estimated time: 1-2 hours for complete setup

Cost consideration: OpenAI's text-embedding-3-small model is very inexpensive (around $0.02 per million tokens). For a typical site with a few hundred pages, initial indexing might cost a few cents, with ongoing costs minimal unless you're constantly creating massive amounts of content.
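
To sanity-check that estimate for your own site, the arithmetic can be sketched in a few lines of Python. The page count and average tokens per page below are assumptions you should replace with your own figures; only the $0.02 per million tokens price comes from the text above.

```python
# Price quoted above for text-embedding-3-small (check current pricing before relying on it).
PRICE_PER_MILLION_TOKENS_USD = 0.02

def estimate_indexing_cost(pages, avg_tokens_per_page,
                           price_per_million=PRICE_PER_MILLION_TOKENS_USD):
    """Rough one-off cost in USD of embedding every page once."""
    total_tokens = pages * avg_tokens_per_page
    return total_tokens / 1_000_000 * price_per_million

# Assumed example site: 500 pages averaging 1,500 tokens each.
cost = estimate_indexing_cost(pages=500, avg_tokens_per_page=1_500)
print(f"${cost:.4f}")
```

With those assumed numbers the site is 750,000 tokens in total, which works out to about 1.5 cents for the initial index.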

Step 1: Setting Up Milvus Locally

Milvus is an open-source vector database specifically designed for storing and searching embeddings. We'll run it locally using Docker with Attu (Milvus's web UI) for easy management.

The Drupal AI module provides excellent documentation for setting up Milvus locally.

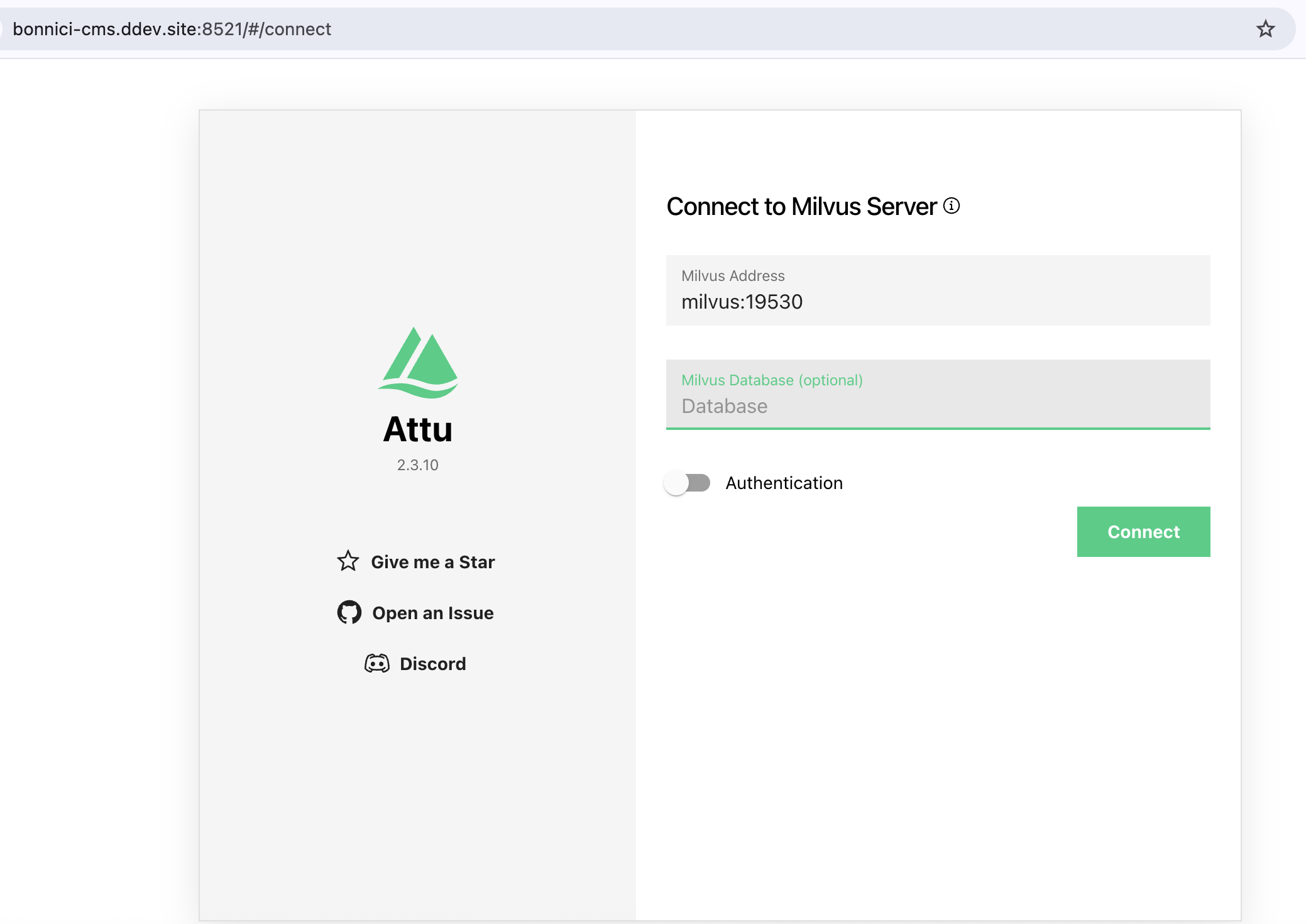

Once running, open Attu (the Milvus web interface) at http://localhost:8521. You should see the Attu connection screen:

The Attu interface lets you manage Milvus visually. Connect using milvus:19530 for the address.

Connection details:

- Milvus Address: milvus:19530 (when connecting from within Docker) or localhost:19530 (from your host machine)

- Database: Leave as "Database" or create a named database

- Authentication: Off for local development

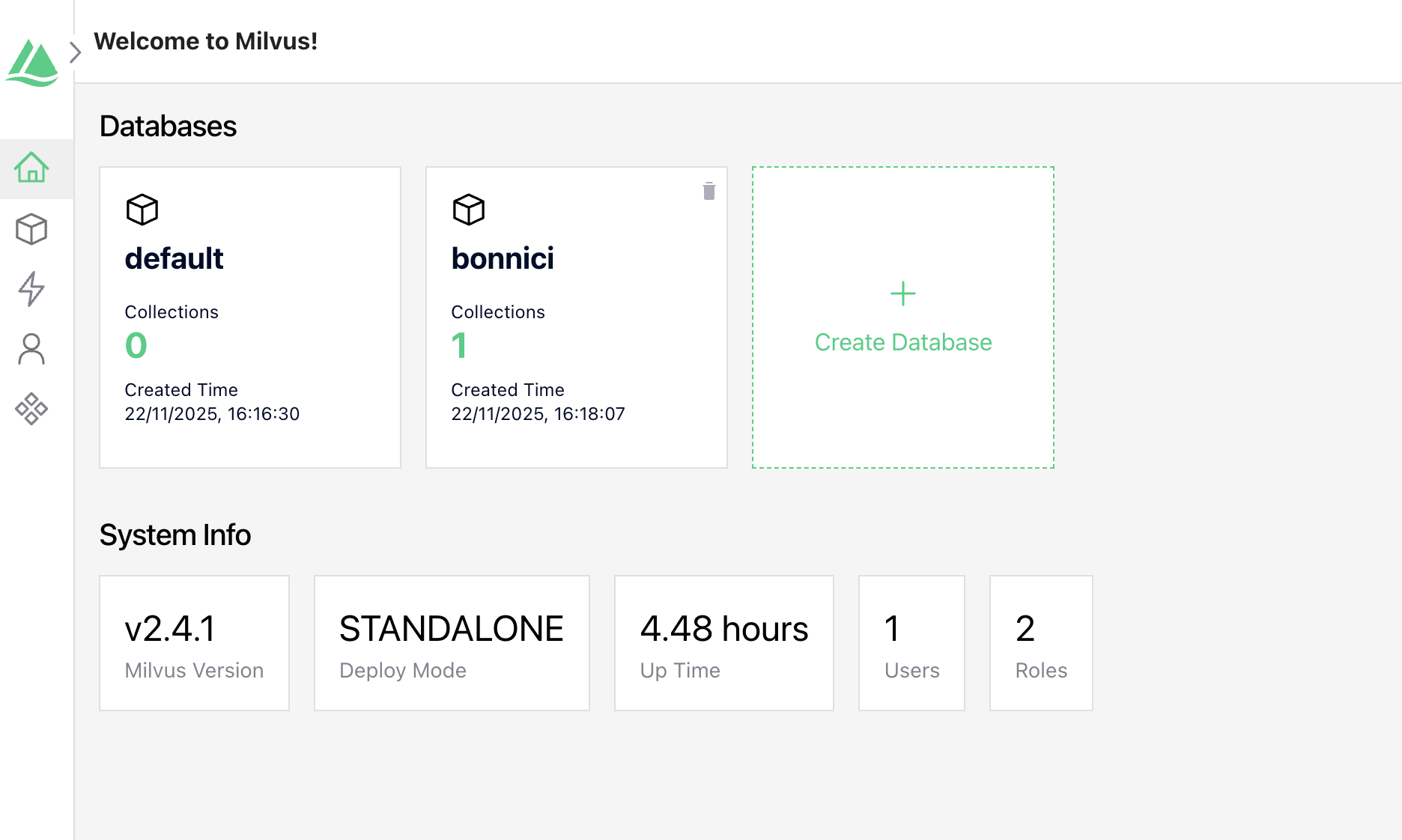

Click "Connect" and you'll see the Milvus dashboard:

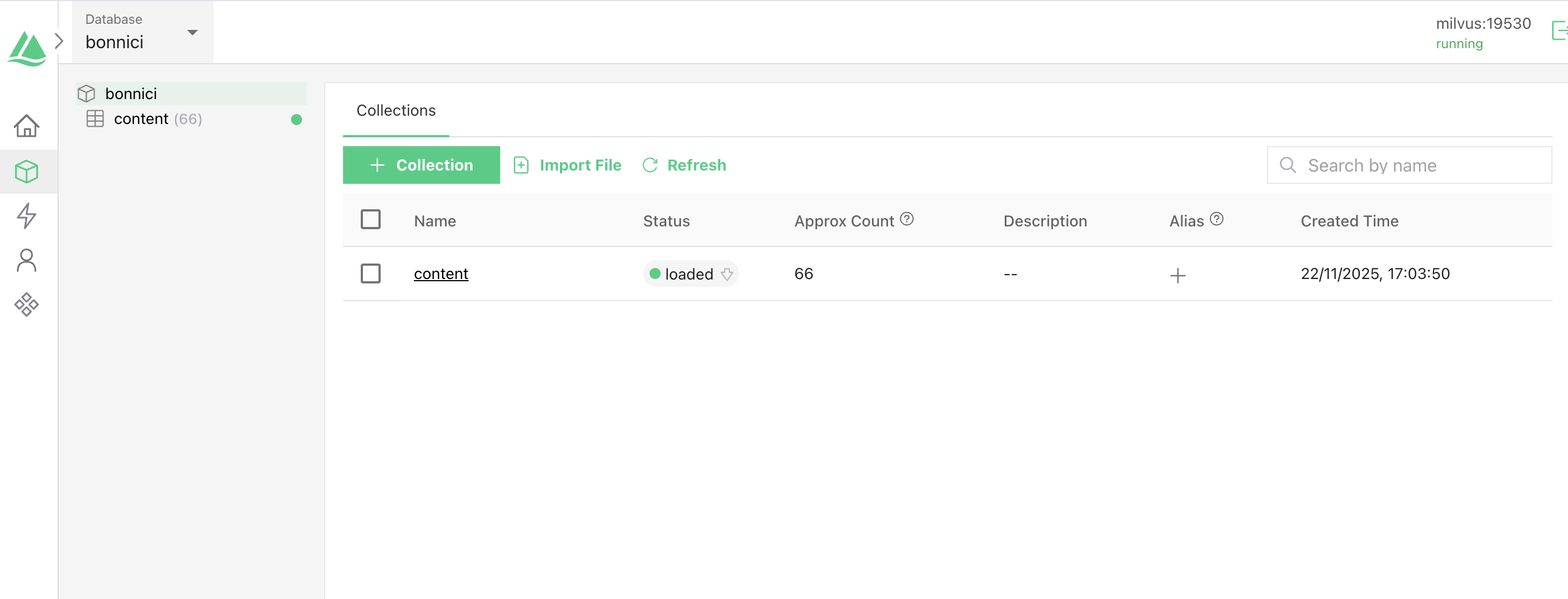

Milvus dashboard showing available databases. We'll create a "bonnici" database for our content.

For detailed configuration options, troubleshooting, and production deployment guidance, check out the official Milvus setup guide.

Step 2: Installing Required Drupal Modules

Now that Milvus is running, let's install the Drupal modules that make semantic search possible.

Required Modules

You'll need these modules:

- ai - The core Drupal AI module providing AI integration framework

- ai_search - Provides Search API backend for AI-powered semantic search

- ai_provider_openai - OpenAI provider for generating embeddings

- ai_vdb_provider_milvus - Milvus vector database integration

- search_api - Drupal's powerful search framework (if not already installed)

Installation via Composer

composer require drupal/ai drupal/ai_provider_openai drupal/ai_vdb_provider_milvus drupal/search_api

Enable the Modules

Using Drush:

drush en ai_search ai_provider_openai ai_vdb_provider_milvus search_api -y

Or enable them through the Drupal admin interface at Extend (/admin/modules).

What each module does:

- ai: Provides the framework for AI integrations in Drupal, including providers and automators

- ai_search: Creates a Search API backend that uses AI embeddings and vector databases

- ai_provider_openai: Connects to OpenAI's API for generating text embeddings

- ai_vdb_provider_milvus: Stores and retrieves embeddings from Milvus vector database

- search_api: Provides the search infrastructure that ai_search builds upon

Step 3: Configuring the AI Provider (OpenAI)

Before we can generate embeddings, we need to configure the OpenAI provider with your API key.

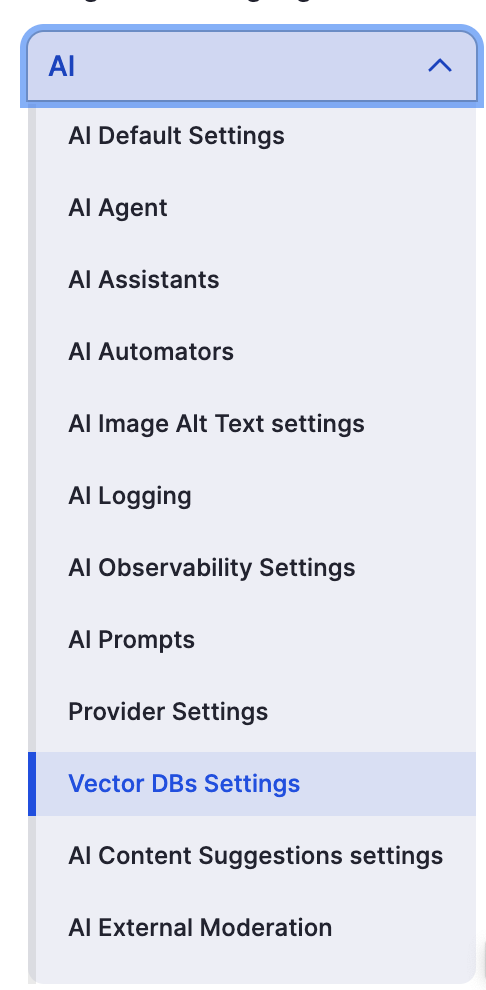

Navigate to Configuration > AI > Provider Settings (/admin/config/ai/providers):

The AI configuration menu shows all available AI-related settings. We need Vector DBs Settings and Provider Settings.

Configure your OpenAI API key and select the embedding model. For semantic search, you'll want to use text-embedding-3-small - it's fast, accurate, and cost-effective.

Why text-embedding-3-small? This model creates 1536-dimensional embeddings that capture semantic meaning efficiently. It's optimised for retrieval tasks (like search), costs very little, and provides excellent results for most use cases. The "small" version is actually quite powerful and significantly cheaper than larger models while maintaining high quality.

Save your provider configuration before moving on, then check that the appropriate models are set in the default AI settings.

Step 4: Configuring Milvus Vector Database

Now let's tell Drupal how to connect to your local Milvus instance.

Navigate to Configuration > AI > Vector DBs Settings (/admin/config/ai/vdb_providers):

Vector Database Settings page where we configure Milvus connection details.

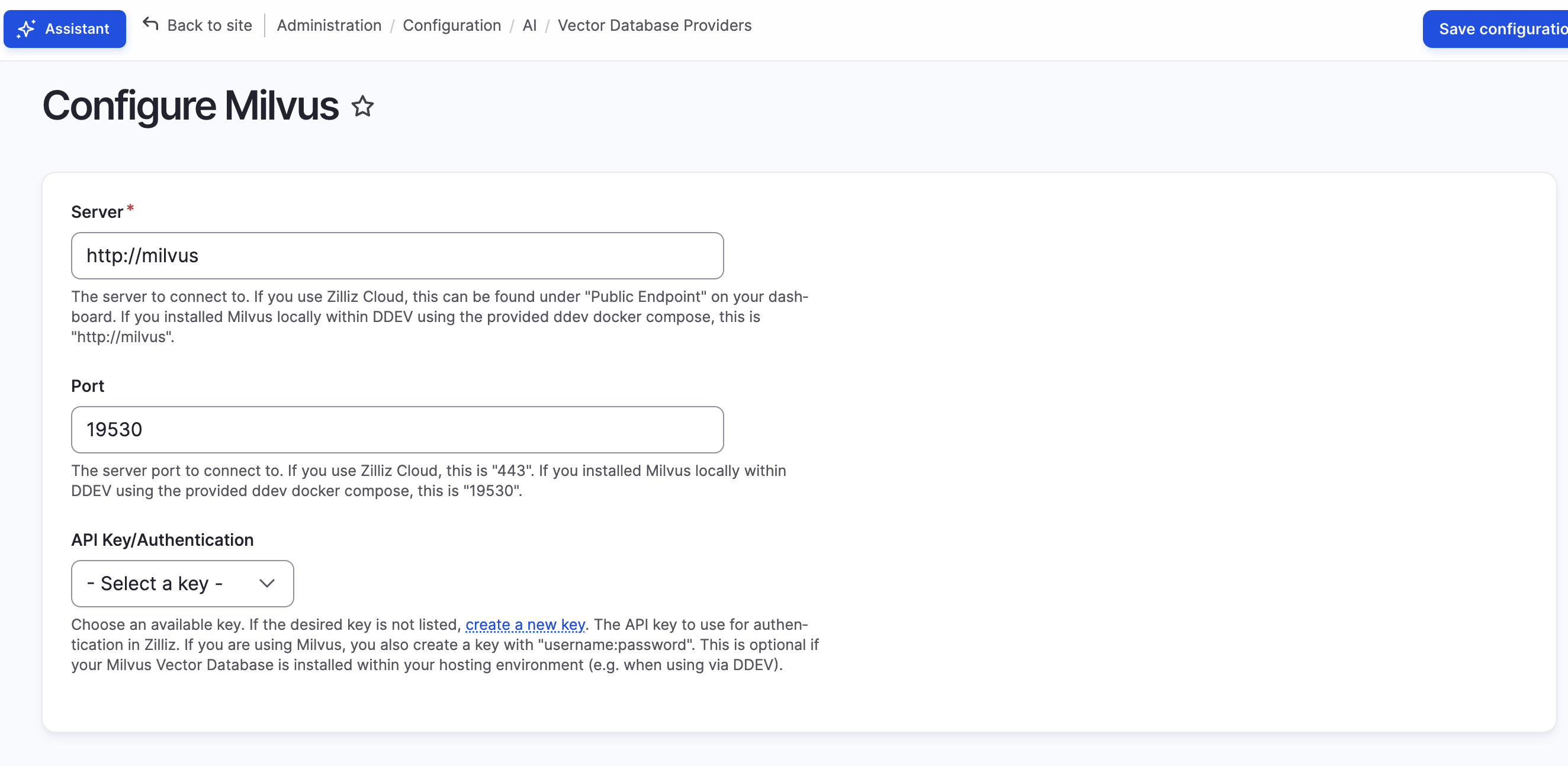

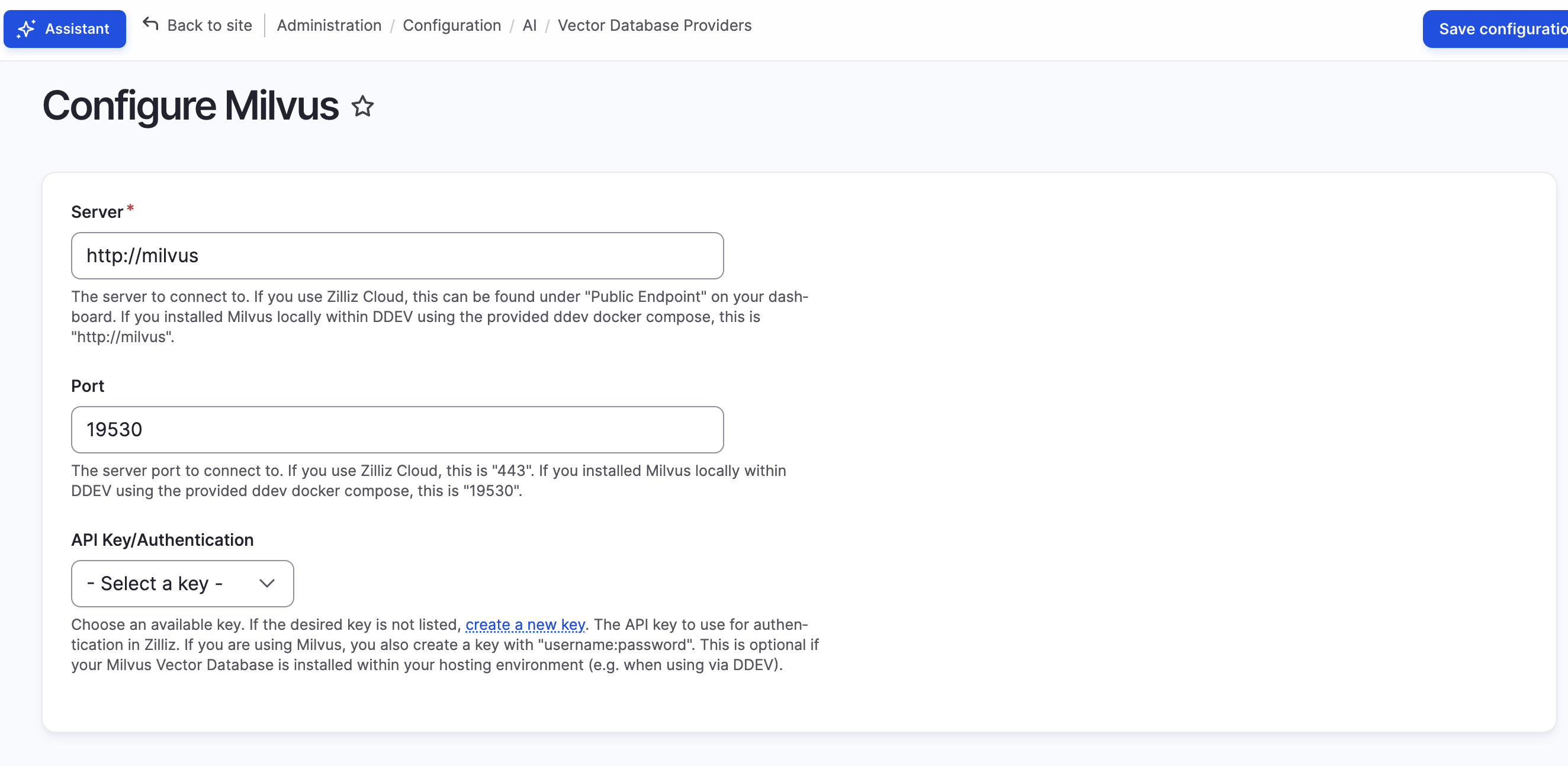

Click "Configure Milvus" and you'll see the configuration form:

Configure your Milvus connection with server address, port, and database details.

Configuration details:

- Server: http://milvus (if Drupal is in the same Docker network) or http://localhost (if Drupal is on your host machine)

- Port: 19530 (Milvus's default port)

- Database Name: bonnici (or whatever you named your Milvus database - this will be created automatically if it doesn't exist)

- Collection: content (this will store your content embeddings - also auto-created)

- API Key/Authentication: Leave empty for local development

About Similarity Metrics: When you configure the search server later, you'll choose "Cosine Similarity" as the similarity metric. This measures the angle between embedding vectors, making it excellent for text similarity regardless of content length. Other options include Euclidean distance (L2) and Inner Product (IP), but Cosine works best for most semantic search use cases.
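
To make the "regardless of content length" point concrete, here is a small Python sketch comparing the three metrics on two made-up vectors that point in the same direction but differ in magnitude. The vectors and function names are illustrative assumptions, not Milvus API calls.

```python
import math

def inner_product(a, b):
    """Inner Product (IP): grows with vector magnitude."""
    return sum(x * y for x, y in zip(a, b))

def l2_distance(a, b):
    """Euclidean distance (L2): straight-line distance between the vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine_similarity(a, b):
    """Cosine: compares direction only, ignoring magnitude."""
    norms = math.sqrt(inner_product(a, a)) * math.sqrt(inner_product(b, b))
    return inner_product(a, b) / norms

# Same direction, different magnitude (hypothetical values).
short_doc = [1.0, 2.0, 3.0]
long_doc = [2.0, 4.0, 6.0]

print("cosine:", cosine_similarity(short_doc, long_doc))
print("L2:    ", l2_distance(short_doc, long_doc))
print("IP:    ", inner_product(short_doc, long_doc))
```

Cosine treats the two vectors as identical in meaning, while L2 reports a gap between them and IP doubles simply because one vector is longer.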

Save the configuration and Drupal will be able to communicate with Milvus.

Your Milvus database with the content collection ready to store embeddings.

Step 5: Creating the Search Server

With AI and Milvus configured, we can now create a Search API server that uses AI-powered semantic search.

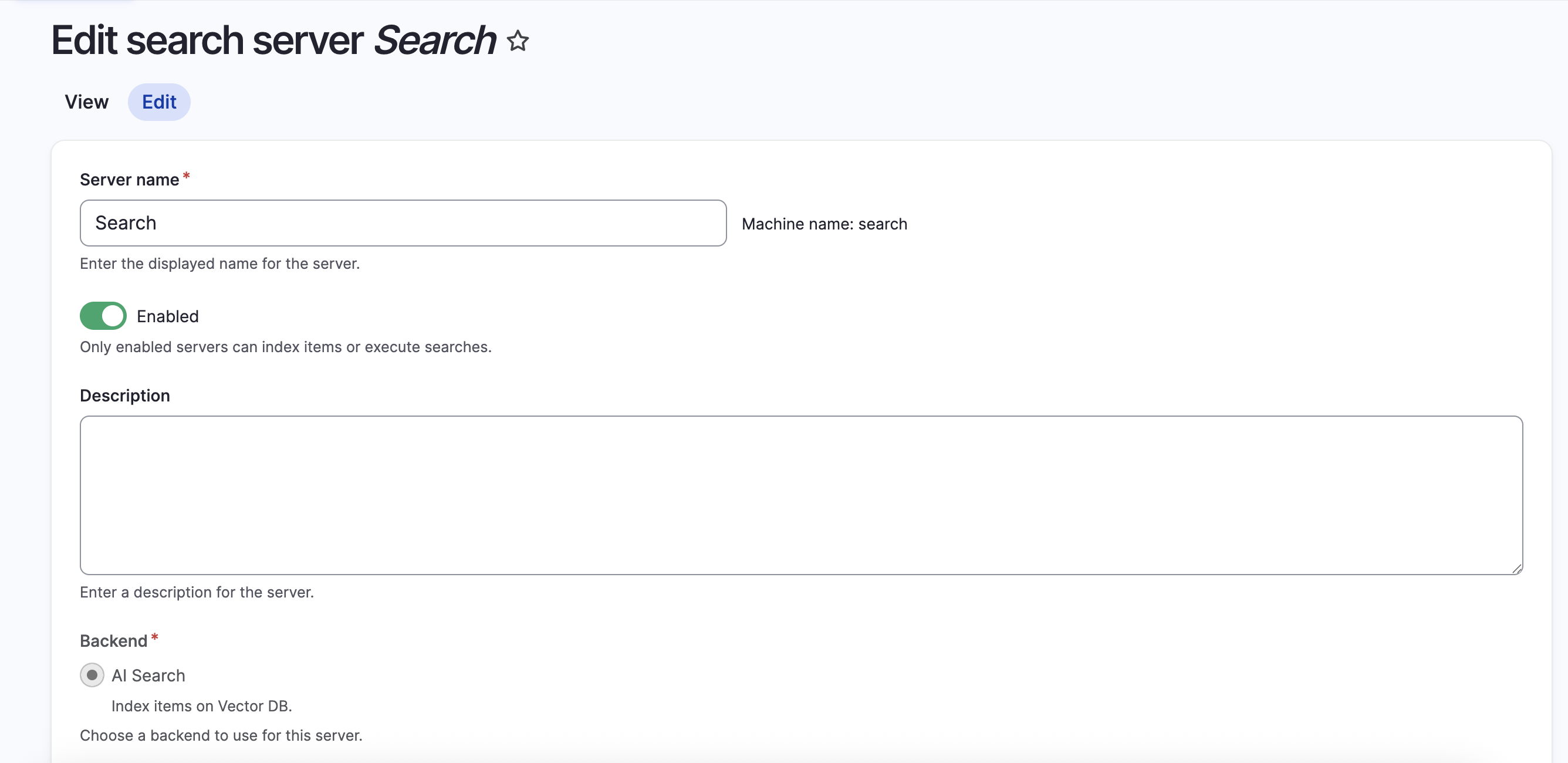

Navigate to Configuration > Search and metadata > Search API (/admin/config/search/search-api) and click "Add Server."

Creating a search server with the AI Search backend.

Server configuration:

- Server name: Search (or any descriptive name)

- Backend: Select AI Search (this is provided by the ai_search module)

- Enabled: Check this box

After selecting the AI Search backend, you'll see additional configuration options. The backend is only available once a provider such as OpenAI has been set up, so configure one first if you haven't already.

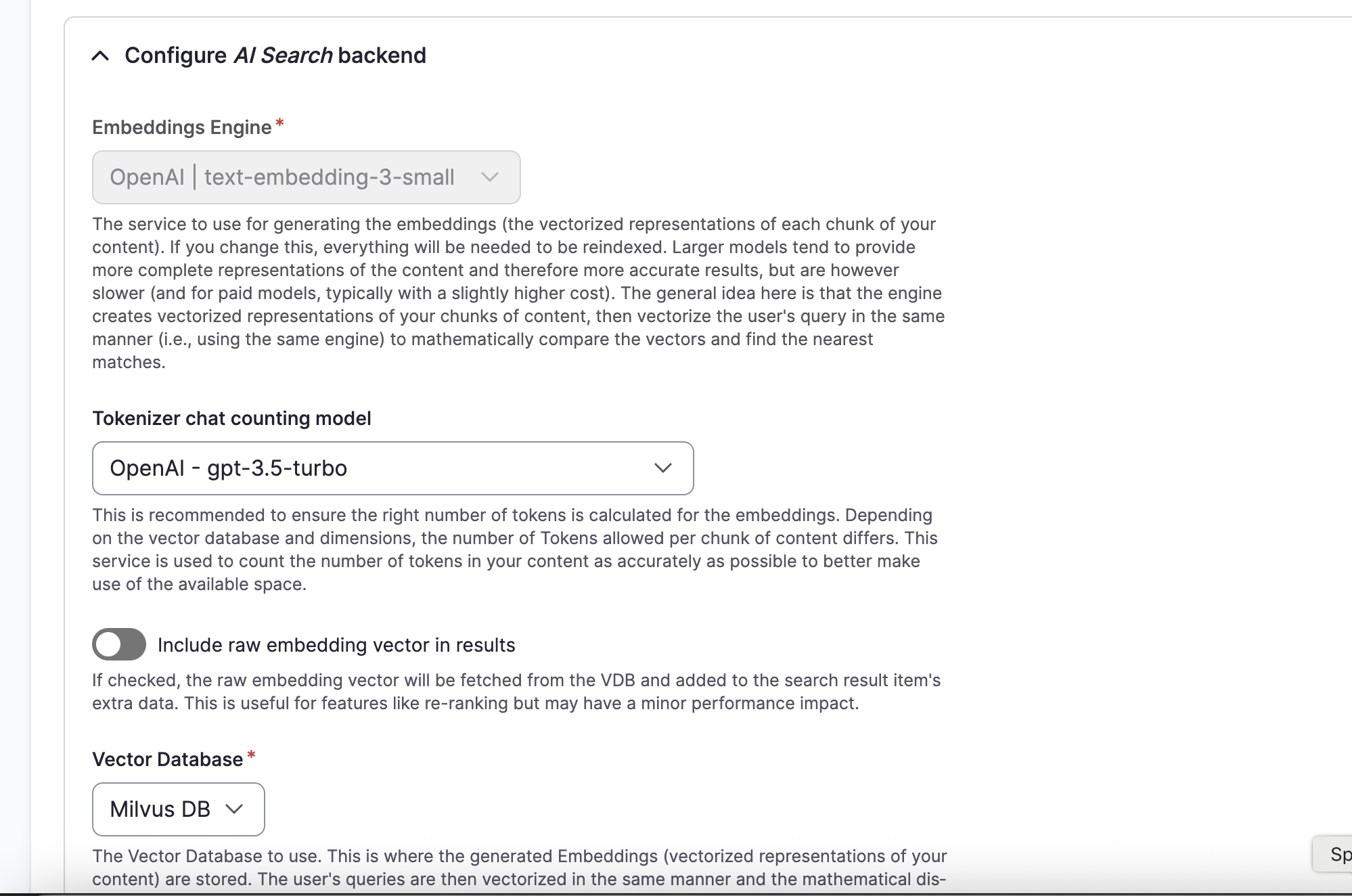

Configure the AI Search backend with your embedding engine, tokenizer, and vector database.

Backend settings explained:

- Embeddings Engine: OpenAI | text-embedding-3-small - This is the AI model that converts your content into embeddings (vector representations)

- Tokenizer chat counting model: OpenAI - gpt-3.5-turbo - This model counts tokens to ensure content chunks fit within the embedding model's limits. Accurate counting matters because input that exceeds the embedding model's token limit is rejected.

- Include raw embedding vector in results: Leave unchecked unless you need the raw vectors for re-ranking or custom processing (adds minor performance overhead)

- Vector Database: Milvus DB - Select the Milvus database you configured earlier
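
To illustrate why token counting matters, here is a rough Python sketch of splitting text into chunks that stay under a budget. It is not the module's actual chunking code: the function name is hypothetical, and whitespace-separated words stand in for real model tokens, which are counted differently.

```python
def chunk_words(text, max_tokens, overlap=0):
    """Split text into chunks of at most max_tokens words.

    Words are a crude stand-in for tokens. Consecutive chunks share
    `overlap` words so context is not cut off abruptly at the boundary.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be >= 0 and smaller than max_tokens")

    words = text.split()
    step = max_tokens - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_tokens]))
        if start + max_tokens >= len(words):
            break  # the final words are already covered by this chunk
    return chunks

sample = "w0 w1 w2 w3 w4 w5 w6 w7 w8 w9"
for chunk in chunk_words(sample, max_tokens=4, overlap=1):
    print(chunk)
```

Each chunk would then be embedded separately, so a long page ends up as several vectors rather than one oversized request.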

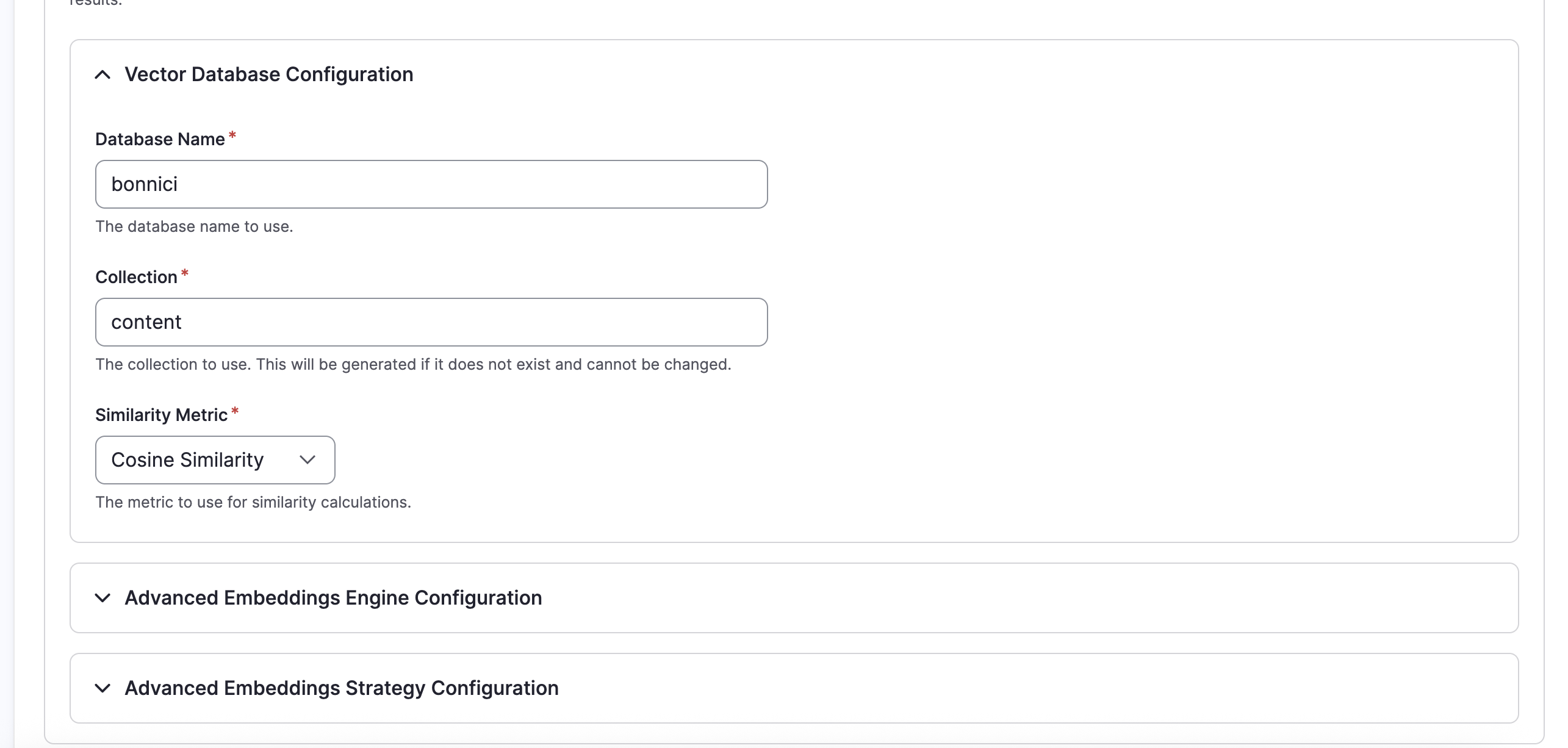

Vector Database Configuration

Specify which Milvus database and collection to use for storing embeddings.

- Database Name: bonnici - The Milvus database name (must match what you configured earlier)

- Collection: content - The collection within that database where embeddings will be stored

- Similarity Metric: Cosine Similarity - How Milvus calculates similarity between embeddings

Behind the scenes: When you index content, Drupal sends it to OpenAI's embedding API, receives back a 1536-dimensional vector, and stores that vector in Milvus along with the content ID. When someone searches, their query becomes a vector using the same process, then Milvus finds the most similar content vectors using cosine similarity maths.

Save your server configuration.

Step 6: Creating the Search Index

Now we need to create an index that tells Drupal what content to convert into embeddings.

From the Search API page, click "Add Index."

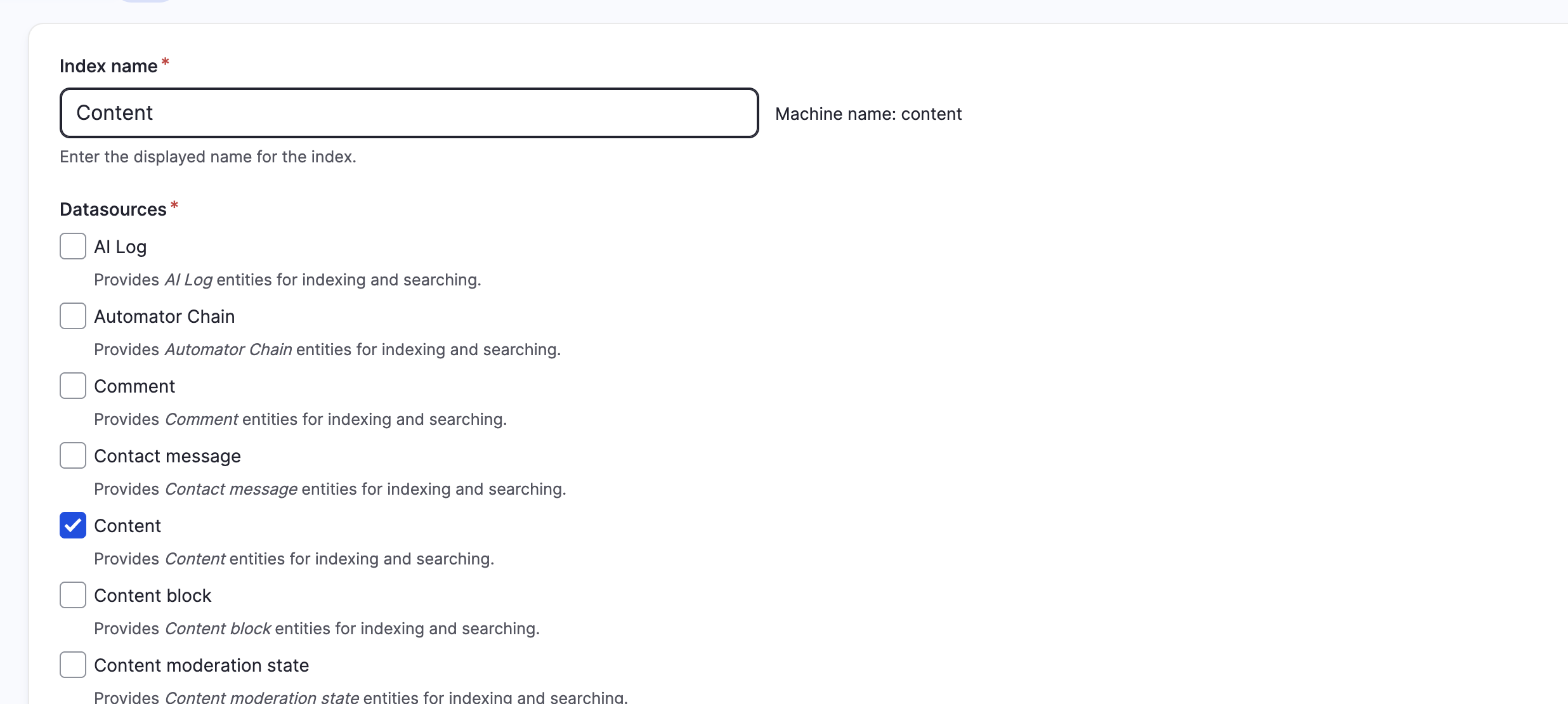

Configure which content types and fields should be indexed for semantic search.

Index configuration:

- Index name: Content (or any descriptive name)

- Datasources: Check Content - This indexes your content entities (articles, pages, custom content types, etc.). You can also select other entity types like Comments, Users, or custom entities if you want to make them searchable.

- Server: Select the Search server you just created

- Enabled: Check this box so the index can be used immediately

Click "Save" and you'll be taken to the Fields configuration.

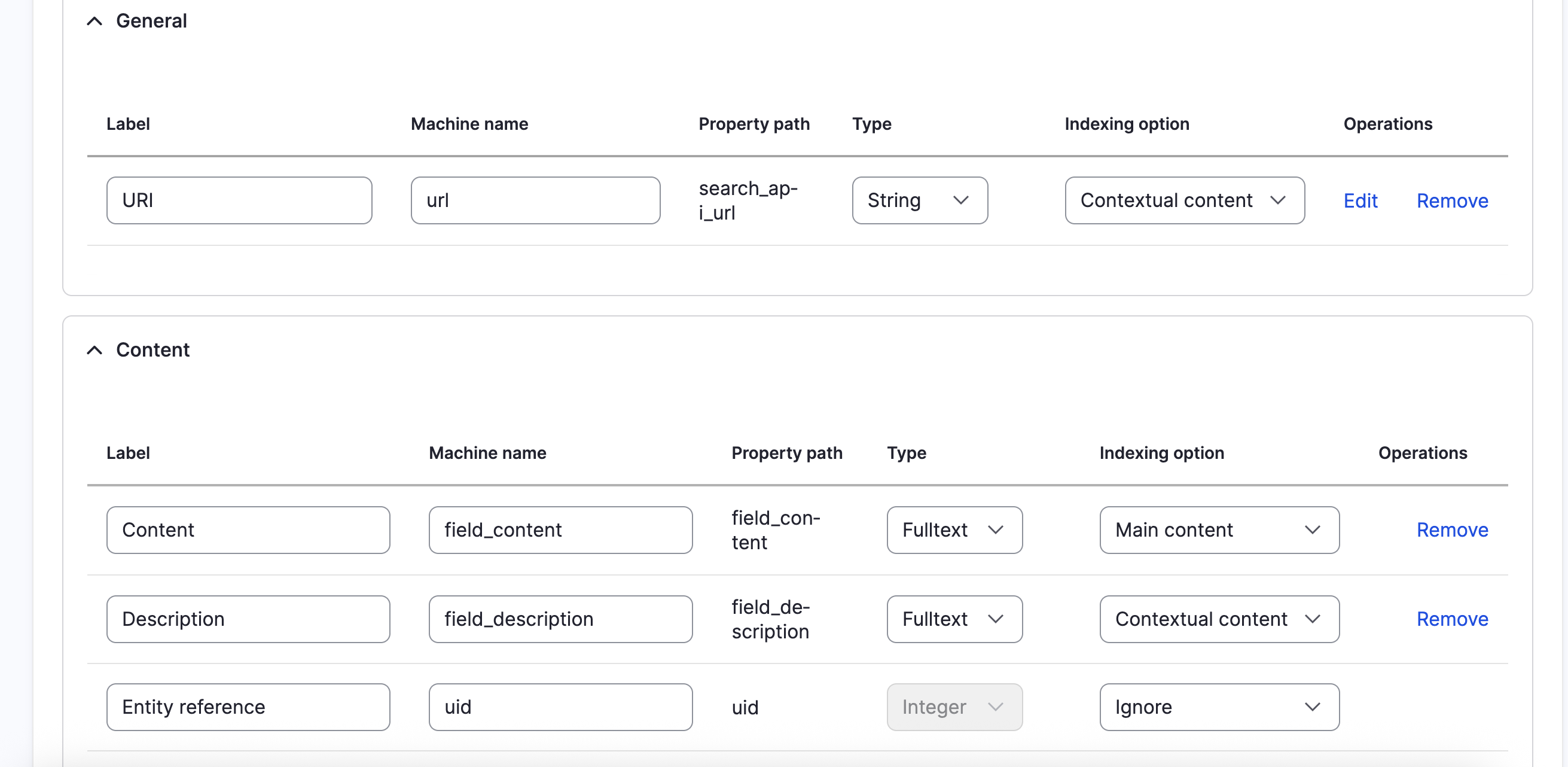

Configuring Fields to Index

Select which fields from your content should be included in the semantic search index.

The Fields tab shows which content fields will be converted into embeddings. You'll want to include:

General section:

- URI - The content URL (String type, "Contextual content" indexing option) - Useful for linking to results

Content section:

- Content (field_content) - Your main content field (Fulltext, "Main content" indexing option) - This is the primary field for semantic search

- Description (field_description) - Content summary/description (Fulltext, "Contextual content") - Helps provide context

Why these indexing options?

- Main content: This field is the primary focus for search relevance

- Contextual content: These fields provide supporting context but aren't the main search target

- Fulltext: Indicates the field contains text that should be analysed and searched

You can add other fields like title, tags, or custom fields depending on what you want semantically searchable. The AI will generate embeddings from all fulltext fields combined.

Save your field configuration.

Step 7: Configuring Search Processors

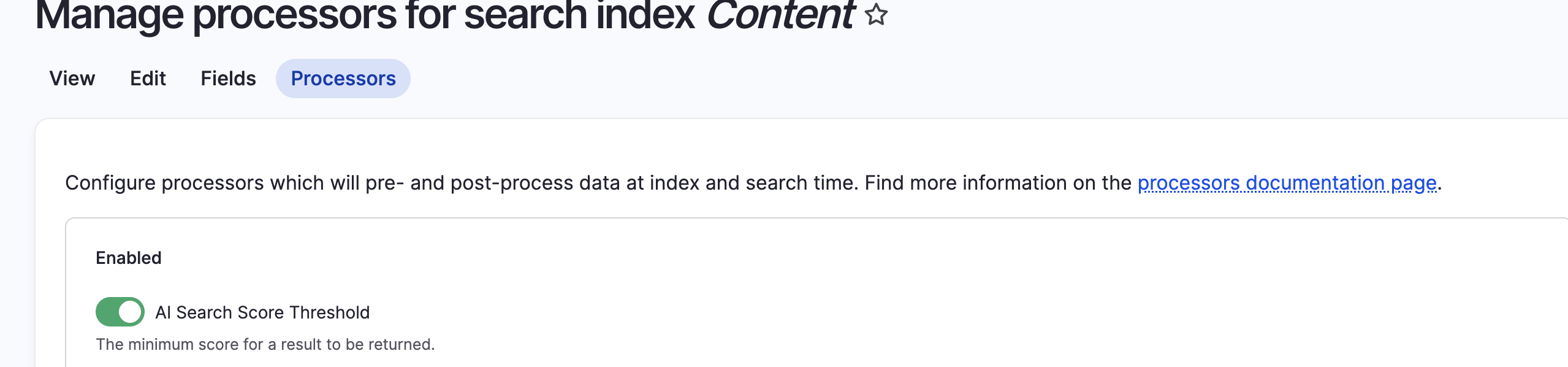

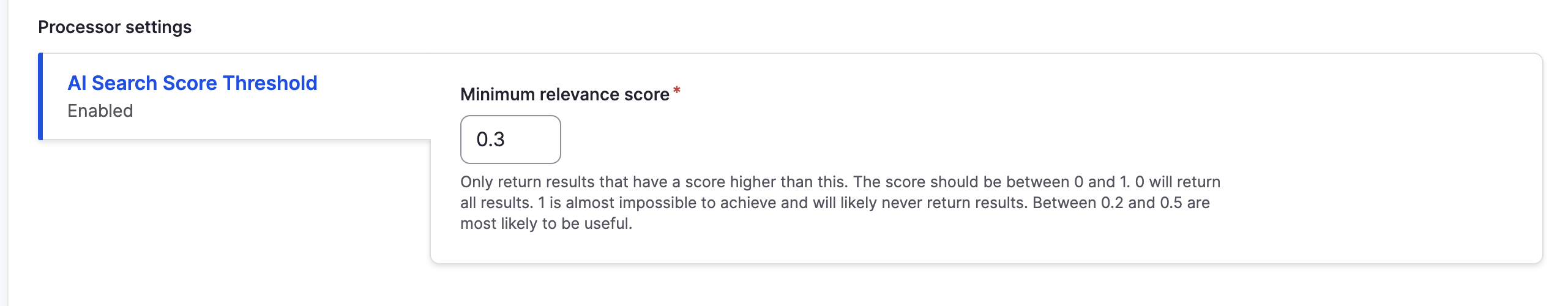

Processors modify how content is indexed and how searches are executed. For AI semantic search, there's one key processor to configure.

Click the "Processors" tab on your index:

Enable the AI Search Score Threshold processor to filter low-relevance results.

Enable AI Search Score Threshold - This processor filters out results below a minimum relevance score.

Configure the minimum relevance score for search results.

Minimum relevance score: 0.3

This is crucial for semantic search quality. The score ranges from 0 (completely unrelated) to 1 (identical meaning).

Score guidelines:

- 0.0: Returns everything (too noisy)

- 0.2-0.5: Good range for most use cases - filters out unrelated content while keeping relevant results

- 0.3: A balanced starting point (our recommendation)

- 0.5+: More restrictive, may filter too much

- 1.0: Nearly impossible to achieve - would only return exact matches

Start with 0.3 and adjust based on your results. If you're getting too many irrelevant results, increase it slightly. If you're missing relevant content, decrease it.
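
The effect of the threshold can be sketched in Python as a simple filter over scored results. The titles and scores below are invented for illustration, and the function is a hypothetical stand-in for what the processor does, not its actual code.

```python
def filter_by_threshold(results, min_score):
    """Keep only results whose relevance score meets the minimum."""
    return [(title, score) for title, score in results if score >= min_score]

# Invented search results for the query "how do I reset my password?"
results = [
    ("Resetting your password", 0.82),
    ("Account security overview", 0.46),
    ("Changing your email address", 0.31),
    ("Office holiday schedule", 0.12),
]

for threshold in (0.0, 0.3, 0.5):
    kept = filter_by_threshold(results, threshold)
    print(threshold, [title for title, _ in kept])
```

In this made-up example, 0.0 lets the unrelated holiday schedule through, 0.3 drops it, and 0.5 leaves only the single strongest match.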

Save your processor configuration.

Index Your Content

Now it's time to actually index your content and generate embeddings.

Click the "Index now" button on your index page, or use Drush:

drush search-api:index content

What happens during indexing:

- Drupal loads your content entities

- Extracts the configured fields (content, description, URL, etc.)

- Sends the text to OpenAI's embedding API

- Receives back 1536-dimensional vectors

- Stores those vectors in Milvus with content IDs

- Marks content as indexed in Search API
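
The steps above can be sketched as a toy Python pipeline. Everything here is a placeholder: fake_embed stands in for the OpenAI embeddings call (it hashes words into 8 numbers, so it captures shared words rather than meaning), and InMemoryVectorStore stands in for the Milvus collection. None of these names come from the Drupal modules.

```python
import hashlib
import math

DIMENSIONS = 8  # tiny stand-in; text-embedding-3-small returns 1536 dimensions

def fake_embed(text):
    """Placeholder for the embeddings API: hash each word into a small unit vector."""
    vector = [0.0] * DIMENSIONS
    for word in text.lower().split():
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        vector[digest[0] % DIMENSIONS] += 1.0
    norm = math.sqrt(sum(v * v for v in vector)) or 1.0
    return [v / norm for v in vector]

class InMemoryVectorStore:
    """Placeholder for the Milvus collection: maps content IDs to vectors."""

    def __init__(self):
        self.rows = {}

    def upsert(self, content_id, vector):
        self.rows[content_id] = vector

    def search(self, query_vector, limit=3):
        # Vectors are unit length, so the dot product equals cosine similarity.
        scored = [
            (sum(q * v for q, v in zip(query_vector, vector)), content_id)
            for content_id, vector in self.rows.items()
        ]
        return sorted(scored, reverse=True)[:limit]

def index_content(items, store):
    """Mirror the indexing steps: load, extract fields, embed, store, mark as indexed."""
    indexed_ids = []
    for content_id, fields in items.items():   # load content entities
        text = " ".join(fields.values())        # extract the configured fields
        vector = fake_embed(text)               # send text, receive a vector
        store.upsert(content_id, vector)        # store the vector with its content ID
        indexed_ids.append(content_id)          # mark as indexed
    return indexed_ids

items = {
    "node/1": {"content": "How to reset your password", "description": "Account help"},
    "node/2": {"content": "Opening hours over the holidays", "description": "Office news"},
}
store = InMemoryVectorStore()
indexed = index_content(items, store)

# At search time the query goes through the same embedding function.
results = store.search(fake_embed("How to reset your password Account help"), limit=1)
print(indexed, results)
```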

For a few hundred content items, this might take a few minutes and cost a few cents in OpenAI API usage. You'll see progress in the Drupal status messages. Be mindful of API limits here: if hundreds of embeddings are requested in quick succession, you might hit your provider's rate limits and see some items fail to index.
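
If you ever script bulk embedding yourself, a common way to cope with rate limits is to retry with exponential backoff. The Python sketch below is a generic illustration under stated assumptions: RateLimitError is a hypothetical stand-in for whatever error your provider's client raises, and this is not how the Drupal modules handle it.

```python
import time

class RateLimitError(Exception):
    """Hypothetical stand-in for the error a provider raises when rate limited."""

def with_backoff(call, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Run call(), retrying on RateLimitError with delays of 1s, 2s, 4s, ..."""
    for attempt in range(max_attempts):
        try:
            return call()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of attempts, surface the error to the caller
            sleep(base_delay * 2 ** attempt)

# Demo: a fake embedding call that is rate limited twice, then succeeds.
attempts = {"count": 0}

def flaky_embed():
    attempts["count"] += 1
    if attempts["count"] <= 2:
        raise RateLimitError("too many requests")
    return "ok"

delays = []  # record the delays instead of actually sleeping
result = with_backoff(flaky_embed, sleep=delays.append)
print(result, delays)
```

Passing `sleep` as a parameter keeps the demo instant; in real use you would leave the default so the waits actually happen.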

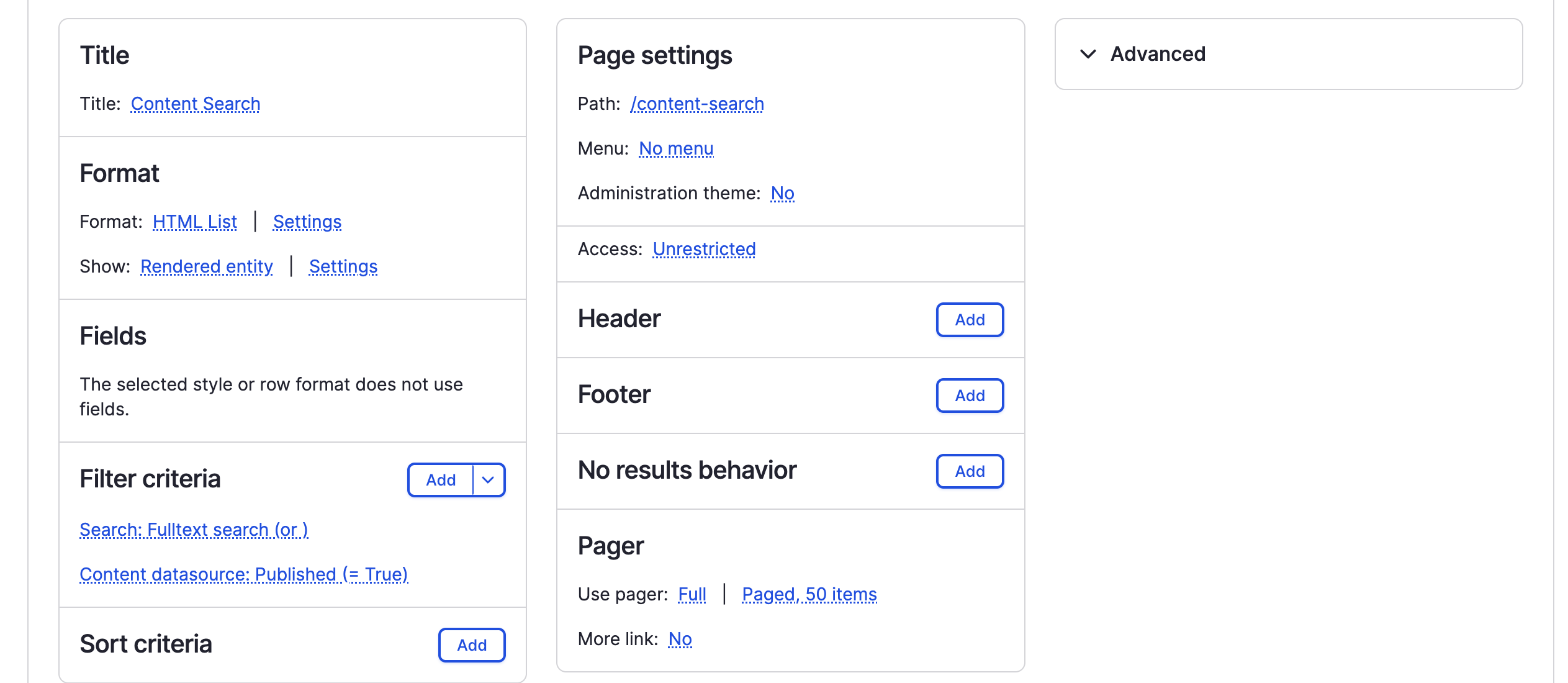

Step 8: Building the Search View

Now for the fun part - creating the actual search interface your users will interact with.

Navigate to Structure > Views (/admin/structure/views) and click "Add view."

Create a Views page that uses your semantic search index.

View configuration:

Title section:

- Title: Content Search

Format section:

- Format: HTML List (or Table, Grid, whatever suits your design)

- Show: Rendered entity (displays full content teasers) or Fields (for custom output)

Page settings:

- Path: /content-search (users will visit yoursite.com/content-search)

- Menu: Optional - add to a menu if you want it in navigation

- Access: Unrestricted (or configure permissions as needed)

Filter criteria:

- Search: Fulltext search - This adds the search box for users to enter queries

- Content datasource: Published (= True) - Only show published content

Server:

- Select your Search index (the semantic search one you created)

Save your view and visit /content-search on your site.

Step 9: Testing Your Semantic Search

Time to see the magic in action. Visit your search page and try some queries that demonstrate semantic understanding:

Test Queries to Try

- Synonym test: If you have content about "automobiles," try searching "cars" or "vehicles"

- Concept test: If you have a "getting started guide," try searching "beginner tutorial" or "how to begin"

- Natural language: Instead of keywords, ask questions like "how do I reset my password?"

- Context test: Search for concepts that are described but not explicitly named

What you should see:

- Results that are conceptually relevant even when exact words don't match

- Higher relevance scores (closer to 1.0) for more similar content

- Results that understand context and meaning, not just keyword frequency

Compare to Traditional Search

If you have a traditional keyword search on your site, run the same queries and compare:

- Semantic search finds content based on meaning

- Keyword search requires exact or partial word matches

- Semantic search handles synonyms, related concepts, and natural language much better

Tuning Relevance

If your results aren't quite right:

Too many irrelevant results: Increase the AI Search Score Threshold (try 0.4 or 0.5)

Missing relevant content: Decrease the threshold (try 0.2 or 0.25)

Specific content types matter more: Adjust field boosting in Search API to weight certain fields higher (title, tags, etc.)

Improving accuracy: Index more fields that provide context, use better content descriptions, ensure your content is well-written and clearly describes concepts

Next Steps & Optimisation

You now have working semantic search! Here are ways to enhance and optimise it:

Index Different Content Types

Add other datasources to your index:

- User profiles for people search

- Comments for discussion search

- Custom entities for specialised content

- Media entities for file/document search

Production Considerations

When you're ready to deploy:

Managed Milvus: Use Zilliz Cloud (Milvus managed service) instead of self-hosting

- No Docker container management

- Better performance and reliability

- Automatic backups and scaling

API Costs: Monitor your OpenAI usage

- Indexing costs are one-time per content item

- Updates only re-index changed content

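- Search queries do call the embeddings API, because each query is converted to a vector before Milvus can compare it, but a query is only a handful of tokens so the per-search cost is tiny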

- Budget for initial indexing, then ongoing costs are minimal

Caching: Enable Drupal caching for search results

- Repeated identical queries return cached results

- No additional API calls or vector database queries

- Significantly faster response times

Incremental Indexing: Set up automatic indexing

- Use Search API's built-in indexing on content save

- Or configure cron to index new content periodically (see my blog post about cron)

- Keeps your search up-to-date without manual intervention

Conclusion

Semantic search transforms how users find content on your Drupal site and, whether we like it or not, it will be the new expectation in web interaction going forward. Instead of matching keywords, you're matching meaning. Users ask questions naturally and get relevant answers, even when the words don't align perfectly. For other ways to enhance your Drupal site with AI, see our State of Drupal in NZ article discussing the evolution of Drupal's capabilities.

What you've built today:

- Milvus vector database running locally

- Drupal AI module configured with OpenAI embeddings

- Search API index using AI-powered semantic search

- A search view that users can interact with

- Understanding of how embeddings and vector similarity work

This same foundation powers AI assistants, recommendation engines, and content discovery features.

The Drupal AI ecosystem is growing rapidly. The patterns you've learned here - embeddings, vector databases, AI providers - apply to many other AI features you might want to implement. Check out my post on 5 AI Quick Wins for Drupal for more ideas on what you can build with these tools.

Additional Resources

- Drupal AI Module Documentation

- Milvus VDB Provider Guide

- Search API Documentation

- OpenAI Embeddings Guide

- YouTube walkthrough

Need Help Implementing Semantic Search?

While this guide walks you through the technical setup, implementing semantic search in a production environment with your specific content structure, performance requirements, and infrastructure can be complex. Our team specialises in advanced Drupal development and AI integration, helping organisations build intelligent search experiences that scale.

We've implemented semantic search for enterprise Drupal sites across New Zealand. Check out our case studies to see our work, or explore our guide on Drupal queue workers for handling large-scale content processing efficiently.